Analyze, Assess, Report: A Guide to Risk Assessments for Data at Rest

Regular data security risk assessments are a core component of many regulatory compliance requirements, internal policies, or confidentiality agreements. Follow this guide for an effective, phased approach to data-at-rest risk assessments.

Many organizations must perform annual risk assessments to meet regulatory compliance requirements. Part of these requirements may be to ensure that customer data (PCI, PII, PHI, etc.) is protected. Data security assessments help determine whether effective controls are in place to protect data in transit, at rest, and in use. Some organizations also use these risk assessments as a tool to jump-start their DLP program.

Today, we will focus on describing how to perform a risk assessment for data at rest:

- How to define the scope of your risk assessment and its requirements

- Who should be involved from an operational, legal and executive perspective?

- What is the most effective approach?

- What deliverables and reports should be produced?

Defining the Scope for a Data Security Risk Assessment

The scope of risk assessments is generally driven by regulatory requirements. Different regulations and compliance mandates will have various requirements around data creation, usage and access as well as data storage, retention and destruction. Different data types will have different data owners, custodians, users and applications. In order to successfully implement a thorough risk assessment, it’s extremely important that the following basic questions are addressed and incorporated into the assessment scope:

- Who is the data and business owner? As the assessment progresses, it will be critical to understand who owns the data and who owns business processes around the data. What departments will be included in evaluations (e.g. HR, Legal, IT, Governance)? What are their roles in the assessment?

- What types of data? If the assessment is focused on identification of PII, what would constitute personally identifiable information for your organization: employee SSNs, addresses, phone numbers or customer information? If the assessment is focused on PCI: credit card numbers, PAN, CVV or something else?

- Where is the data? Will data discovery involve scanning databases or repository shares, FTP and SharePoint sites, cloud repositories or user endpoints? Understanding high-level business processes and data flows helps determine where data is likely to be stored and how it could be exposed.

- How is data handled? Before initiating an assessment, it is important to understand proper data handling procedures and existing protection controls. Data assessments generally produce a large number of events, so it’s important to know whether a “violation” is part of a standard business process or an actual security gap.
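Capturing the answers to these scope questions in a structured form makes it possible to judge each later finding against them. The sketch below is a minimal Python illustration, not part of any DLP product’s API: the names `AssessmentScope`, `Finding` and `triage`, as well as the repository labels, are hypothetical. It shows how knowing where a data type is expected to live under standard business processes separates routine hits from potential security gaps.

```python
from dataclasses import dataclass, field


@dataclass
class AssessmentScope:
    """Hypothetical record of answers to the scope questions."""
    data_owner: str
    business_owner: str
    data_types: set[str]
    # Where each data type is *expected* to be stored under standard
    # business processes ("How is data handled?").
    expected_locations: dict[str, set[str]] = field(default_factory=dict)


@dataclass
class Finding:
    """One discovery result: a data type found in a repository."""
    data_type: str
    location: str


def triage(scope: AssessmentScope, finding: Finding) -> str:
    """Separate expected business-process hits from potential security gaps."""
    if finding.data_type not in scope.data_types:
        return "out-of-scope"
    if finding.location in scope.expected_locations.get(finding.data_type, set()):
        return "expected-business-process"
    return "potential-gap"


# Illustrative PII-focused scope; all names and locations are made up.
scope = AssessmentScope(
    data_owner="HR data owner",
    business_owner="HR",
    data_types={"SSN"},
    expected_locations={"SSN": {"hr-database"}},
)

print(triage(scope, Finding("SSN", "hr-database")))   # expected-business-process
print(triage(scope, Finding("SSN", "public-share")))  # potential-gap
print(triage(scope, Finding("PAN", "public-share")))  # out-of-scope
```

Keeping PAN out of this PII-focused scope mirrors the phased approach: card data found along the way is recorded as out of scope rather than mixed into the current assessment.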

The key to success is not just following the correct methodology, but also implementing a phased approach that maps to organizational priorities. If finding PII is a critical priority and PCI data is secondary, it would be prudent to focus on PII first, because it is extremely difficult to perform two assessments simultaneously. It would also be impractical to deal with two large data sets and two different remediation efforts at once. “Boiling the ocean” is NOT a prudent approach to data assessments, or to security in general.

Taking a Phased Approach

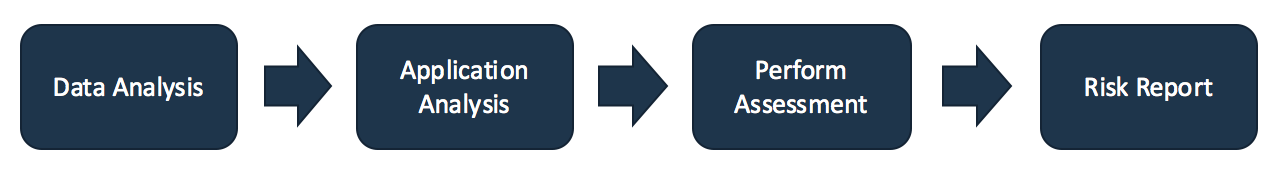

Once a high-level scope has been outlined, the data assessment is carried out in four phases: the Data Analysis Phase, Application Analysis Phase, Perform Assessment Phase and Risk Report Phase. Each phase is described in detail below.

Data Analysis Phase

The Data Analysis Phase is very important and should not be overlooked. Many organizations do not spend sufficient time in this planning phase and jump directly into scanning, that is, straight into performing the risk assessment. Poor data and application analysis will almost always lead to erroneous results and gaps in mitigation strategies.

The Data Analysis Phase provides a framework to identify data owners and associated data elements, and to map them to data classification and business process flows. The key elements and definitions for this phase include:

- Data Owners – an entity (individual or business unit) with statutory and operational authority for specific data that is responsible for establishing controls for its generation, collection, processing, access and destruction. Example: “Data owner for HR information is Bob Smith.”

- Data Types and Attributes – Define information types and attributes that describe data in scope. Examples of data elements for regulated data include:

  - EMR/Patient information

  - PHI/ePHI/SSN

  - NPI

  - Credit card numbers

  - CVV numbers

  - PIN/PAN

  - Customer information (First + Last name, phone numbers, addresses)

  - Sexual orientation and religious affiliation

- Data Classification – Classification of data is based on its level of sensitivity and the impact should that information be compromised, modified or accessed without proper authorization and authentication. For example, a healthcare organization may have the following classification scheme:

  - Public data – No impact if information is lost. Examples would include published press releases, research publications and health recommendations.

  - Private data – Moderate risk with loss of this information. Examples would include purchase orders and business presentations.

  - Restricted data – Significant risk and impact with loss of this information. Examples would include customer names, addresses, SSNs and other data protected by state or federal privacy regulations or confidentiality agreements.
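To make the data types and the example classification scheme concrete, each data element can be expressed as a detection rule and mapped to a classification level. The following is a minimal Python sketch under stated assumptions: the element names, the regular expressions and the `PO-` purchase-order format are hypothetical, and the rule that content inherits the most sensitive level of any element it contains is an assumption rather than part of the scheme above. Only the Luhn checksum is a standard check, used to validate payment card numbers.

```python
import re
from enum import IntEnum


class Classification(IntEnum):
    """Example healthcare scheme; a higher value means more sensitive."""
    PUBLIC = 0
    PRIVATE = 1
    RESTRICTED = 2


def luhn_valid(digits: str) -> bool:
    """Luhn (mod 10) checksum, used to validate payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


# Hypothetical detection rules: 13-19 digits (optionally space/dash separated),
# US SSN in ddd-dd-dddd form, and an invented "PO-" purchase-order format.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
PO_RE = re.compile(r"\bPO-\d{6}\b")

# Hypothetical mapping of data elements to classification levels.
ELEMENT_CLASSIFICATION = {
    "credit_card_number": Classification.RESTRICTED,
    "ssn": Classification.RESTRICTED,
    "purchase_order": Classification.PRIVATE,
}


def detect_elements(text: str) -> set[str]:
    """Return the data element types found in a piece of content."""
    found = set()
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):  # a pattern match alone is not enough
            found.add("credit_card_number")
    if SSN_RE.search(text):
        found.add("ssn")
    if PO_RE.search(text):
        found.add("purchase_order")
    return found


def classify(text: str) -> Classification:
    """Assumption: content takes the most sensitive level of any element found."""
    return max(
        (ELEMENT_CLASSIFICATION[e] for e in detect_elements(text)),
        default=Classification.PUBLIC,
    )


print(classify("Press release: new clinic opens").name)      # PUBLIC
print(classify("PO-123456 for office supplies").name)        # PRIVATE
print(classify("Card 4111 1111 1111 1111, PO-123456").name)  # RESTRICTED
```

Pairing the card-number pattern with a checksum is one way to cut false positives, since many 16-digit strings are not valid card numbers.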

To make the data analysis phase more effective and engaging, a data workshop is a good starting point. Data workshops should be organized in a small-group format with designated business units and data owners. Small-group collaboration enables discussion of data ownership, threat vector identification, mapping to classification parameters, and alignment with the organizational structure.

Application Analysis Phase

The Application Analysis Phase is an extension of the Data Analysis Phase. Whereas data analysis establishes who owns the data, application analysis takes that ownership information and maps it to environments and application use. To properly assess the organization’s data security posture, it’s important to understand how applications use specific data types: where the data is stored, by which application, and which controls protect the data for specific sets of applications.

To provide a full data lifecycle view of the in-scope data, the Application Analysis Phase includes the following components:

- Environment – Define the individual locations or regions where the data resides. Depending on your company’s operating model, locations can be defined as individual states (CA, TX, OR) or geographic regions (NA, APAC, EMEA). Different locations may have different laws and regulations governing data protection, so it’s important to understand the nuances of data privacy laws in each region.

- Applications – Identify the applications that may “touch” data in the scope of the assessment. Ensure the application list is comprehensive: it should include all applications involved in data creation, movement, collaboration and access, along with secondary applications (such as QA and backup).

- Data flows – Define individual data flows based on how the data and applications are used. Once the environment and application components are determined, create logical data flows that map those attributes to protection controls for later evaluation.

- Protection Controls – Identify and map the existing data protection controls around the data in scope. Controls vary depending on several factors. For example, dormant data might have encryption requirements, whereas live data might have both file integrity and encryption controls.

Once completed, this phase will provide a clear understanding of the applications using your data, where your data is “supposed” to be stored, and what controls “should” exist to protect it. This information is used during the assessment phase to compare the controls found during scans with the expected controls identified at this stage.

Perform Assessment Phase

Performing the assessment should now be a relatively easy task: the hard work of understanding data ownership has been completed, and data usage and protection controls have been identified and mapped into a comprehensive framework.

An assessment generally involves executing a number of automated data discovery scans across in-scope data repositories based on the defined assessment requirements. Data discovery provides an automated approach to locating, classifying and remediating sensitive data. Discovery scans can identify multiple business policy violations that potentially expose sensitive data and create risk; these findings are also used later in reports. Depending on your scope, automated discovery scans can:

- Find PCI data in places where it is not expected or desired

- Classify PII data in various repositories to ensure compliance

- Provide an overview of who is accessing and using customer data

As a discovery scan runs across different repositories, each individual file is opened and analyzed against the defined assessment policies. If a policy violation is found, an incident is reported. Discovery policies are constructed from the information gathered in the previous phases. Scan results include not only visibility into files and their content but also vital assessment information such as:

- File data ownership

- Repository type

- File permissions and metadata information

- Historical file access information

- File type and location

- Encryption information

Because this information is reported with each policy violation, an auditor can create different reports for management.

There are several considerations in configuring discovery scans. These options are important to evaluate because they can increase the accuracy of assessment results and reduce the performance impact on the network and users:

- Type of scans – Various types of scans can run against network shares, databases, SharePoint and cloud repositories. Correctly identifying the platform where your data resides will help you select the appropriate scans to run.

- Detection methodology – Content or file identification can be based on pattern identification, dictionary terms, file metadata properties and registered content. Choosing the correct detection methodology, or a combination of methodologies, will greatly increase detection accuracy.

- Performance impact – Scans can be scheduled to run after business hours, limiting performance impact. Discovery sensors should be placed near the data source to reduce network traffic.

The time required to execute all scans varies greatly depending on the amount of data to be scanned. You should expect an individual sensor to scan approximately 2 TB of files per day. Organizations may use multiple scanners to achieve higher crawling speeds and to support geographically dispersed environments. For example, at 2 TB per sensor per day, scanning 20 TB would take a single sensor about 10 days, or roughly 2.5 days with four sensors sharing the load evenly. All individual sensors should report into a centralized console for effective policy and incident management.

Risk Report Phase

The value of performing a risk assessment lies not only in identifying where your sensitive data resides, but also in pinpointing potential security protection gaps. This last phase analyzes scan results and violations in detail, aligns the results with existing business processes and data owners, evaluates them against existing protection controls, and provides solution recommendations. Solution recommendations may include the following:

Executive reports and dashboards

Dashboards and reports provide visual and detailed representations of findings and policy violations. Executive management might be more interested in visibility across the entire organization, functional business units, business process violations and trend analysis. Security operations might want more detailed information about actual file locations, the content within the files, and the configuration of existing protection controls. Additionally, the group responsible for each compliance regulation might “ask” for specific reports, such as data retention and destruction policy matches. Common reports that are useful in a data security assessment include:

- Top policy violations by geo location

- Top policy violations by storage repository type

- Top content matches by users

- Top content type violations by storage

- Top severity incidents by location

- Top repositories with improper permissions

- Top users by policy violation

- Gap analysis: policy vs. control violations

- Gap analysis: policy vs. business process

Incident management workflow

As policy violations and incidents are reviewed, incident management and notifications must follow detailed processes and workflows. This ensures that all incidents are properly reviewed, addressed and eventually mitigated. The incident workflow dictates what happens when an incident is raised: who needs to be notified, what the process is for handling the security violation, and who should remediate files or apply additional security controls to close the gap. An auditor should work with the organization to review sample incidents and establish a proper incident management workflow as part of the Risk Report Phase.

Remediation methodology

Even though policy incident remediation is not part of a data security risk assessment, remediation methodology steps should be considered as part of the assessment. As data, business processes, locations, user access and controls are reviewed and measured, an auditor can recommend data security controls. Controls can be as simple as deleting files from locations where they shouldn’t be, or as advanced as Active Directory user and group cleanup. Protection control recommendations will be driven by the available people and technology resources. For example, some “quick win” recommendations that can be implemented after a risk assessment include:

- Move files that violate business policy to a secure location

- Automatically delete files stored in cloud repositories that violate business policy

- Apply proper file share permission controls to public shares
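One of the quick wins, moving files that violate business policy to a secure location, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a product feature: the list of flagged files is assumed to come from a discovery scan export, the function names `quarantine` and `demo` are hypothetical, and `chmod(0o700)` restricts the quarantine directory to its owner only on POSIX file systems. Relative paths are preserved so data owners can trace where each file came from.

```python
import shutil
import tempfile
from pathlib import Path


def quarantine(flagged: list[Path], source_root: Path, quarantine_root: Path) -> list[Path]:
    """Move flagged files under quarantine_root, keeping their relative paths."""
    quarantine_root.mkdir(parents=True, exist_ok=True)
    quarantine_root.chmod(0o700)  # owner-only access on POSIX file systems
    moved = []
    for path in flagged:
        target = quarantine_root / path.relative_to(source_root)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(path), str(target))
        moved.append(target)
    return moved


def demo() -> tuple[str, bool, str]:
    """Quarantine one flagged file from a throwaway 'public share'."""
    with tempfile.TemporaryDirectory() as tmp:
        share = Path(tmp, "public_share")
        (share / "finance").mkdir(parents=True)
        flagged = share / "finance" / "cards.csv"
        flagged.write_text("sample flagged content\n")
        moved = quarantine([flagged], share, Path(tmp, "quarantine"))
        return (
            moved[0].relative_to(tmp).as_posix(),
            flagged.exists(),
            moved[0].read_text(),
        )


if __name__ == "__main__":
    print(demo())  # ('quarantine/finance/cards.csv', False, 'sample flagged content\n')
```

Moving rather than deleting keeps the decision reversible until the data owner confirms the file is not needed by a legitimate business process.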

Summary

Whether a data security risk assessment is your annual compliance requirement or your first step in developing a world-class data protection program, it provides an effective measure of the security controls for your data repositories. The more effort you put in up front to understand data ownership, business process baselines and existing protection controls, the more value you get at the end: accurate detections, fewer false positives, and a solid strategy to ensure continuous protection for data at rest.
