Most websites welcome traffic from all over the world, but traffic from bad bots is generally harmful to business.

Although there are myriad good bots bouncing around the Internet, they are outnumbered by bad bots that do harmful things to websites both large and small.

Learning to recognize bad bots in action, and keeping them from negatively impacting your own operations, is an extremely useful undertaking in today's environment. If you are serious about keeping these kinds of bots at bay, then this article should be of use to you.

In this article:

- What are Bad Bots?

- How to Detect Bots

- How to Stop Bad Bots

- Frequently Asked Questions (FAQs)

Photo by Lukas via Pexels

What are Bad Bots?

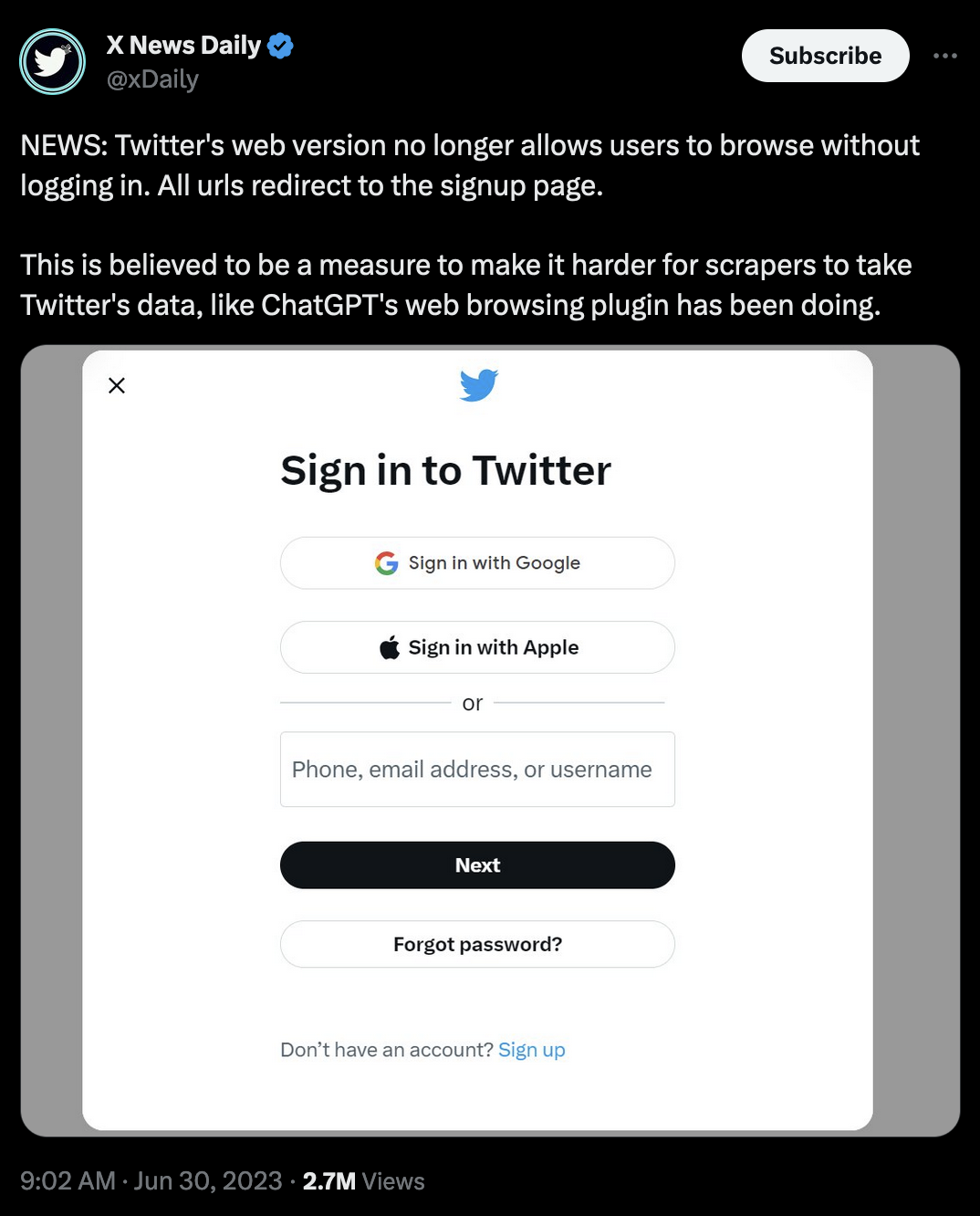

Bad bots are automated programs designed to help cybercriminals run their schemes at scale. They rely on speed and replication to amplify simple attacks such as data mining, brute-forcing, and ad fraud. For example, these bots are built to:

- Scrape website content

- Engage in DDoS attacks

- Brute-force their way through web-based forms

Among the milder offenses bad bots commit are skewing your analytics metrics and occasionally overloading server resources. Left unidentified and unmitigated, however, bad bots can also carry out full-fledged attacks on infrastructure, theft, and more.

Bad bots now collectively account for nearly half of all online traffic, and that share appears to be trending upward. Worse, most of these malicious bots are fairly sophisticated, which makes them much more likely to evade detection.

Below, we'll cover bot detection techniques and mitigation best practices you can implement today, in addition to answering a few frequently asked questions on the subject.

How to Detect Bots

There are many ways to detect bots as they access resources within your network. Here are two options you should consider:

User Agents

The 'User-Agent' header sent with each request identifies the application, vendor, and operating system that a visitor to an online resource is using.

Detecting bots by their user agent headers is a simple approach that works for any bots that announce their presence with a unique or otherwise identifiable name.

Unfortunately, many bad bots hide more effectively by sending the same user agents that ordinary human visitors' browsers send. This makes it necessary to look for other clues to get a more comprehensive view of the bad actors on your network.
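
As a rough illustration of user agent matching, here is a minimal Python sketch. The `looks_like_declared_bot` function and the substrings in `BOT_UA_PATTERN` are hypothetical examples rather than a vetted list, and a bot that copies a normal browser's user agent will pass this check untouched.

```python
import re
from typing import Optional

# Hypothetical, deliberately short list of substrings that self-identifying
# bots and HTTP tools often put in their User-Agent header. A real deployment
# would maintain a larger, regularly updated list.
BOT_UA_PATTERN = re.compile(
    r"bot|crawler|spider|scraper|curl|wget|python-requests",
    re.IGNORECASE,
)


def looks_like_declared_bot(user_agent: Optional[str]) -> bool:
    """Return True if the User-Agent header names a bot or is missing."""
    if not user_agent:
        # Mainstream browsers always send a User-Agent, so an empty or
        # missing header is itself a red flag.
        return True
    return BOT_UA_PATTERN.search(user_agent) is not None
```

Simple substring matching like this can also produce false positives, so it may be safer to use it for labeling traffic for review than for blocking requests outright.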

Behavioral Indicators

Where user agent headers fall short in helping identify bad bots, behavioral indicators of all kinds can pick up the slack.

The only drawback to using these kinds of indicators is that they are not standardized, so you will likely need to build your own solution or commit to dedicated web defense software to take advantage of them.

Here are a few examples of behavioral indicators you may find useful for identifying bad bots:

- Pageviews: Unusually high pageview counts on your website are often caused by bots. Tracking visitors who access far more pages in a given time frame than anyone else can help you identify bad bots as they move.

- Region: When traffic to your web resource suddenly spikes and most of it comes from a region that makes no sense for your audience, it can be a good sign that something fishy is afoot.
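
Both indicators can be sketched in a few lines of Python, assuming you already have a stable visitor identifier (such as an IP address or session ID) and a country code for each request from an IP geolocation lookup. `PageviewTracker` counts each visitor's pageviews in a sliding time window, and `unexpected_region_dominates` checks whether a batch of recent requests, for example those received during a traffic spike, is dominated by a region you don't expect. The names, the 60-second window, the 30-pageview limit, and the 50% share threshold are illustrative assumptions to tune against your own traffic.

```python
import time
from collections import Counter, defaultdict, deque
from typing import Deque, Dict, Iterable, Optional, Set


class PageviewTracker:
    """Flag visitors whose pageviews within a sliding window exceed a limit.

    The default window and limit are illustrative; tune them against your
    site's normal traffic.
    """

    def __init__(self, window_seconds: float = 60.0, max_pageviews: int = 30) -> None:
        self.window_seconds = window_seconds
        self.max_pageviews = max_pageviews
        # visitor_id -> timestamps of that visitor's recent pageviews
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, visitor_id: str, now: Optional[float] = None) -> bool:
        """Record one pageview; return True if the visitor is now over the limit."""
        now = time.monotonic() if now is None else now
        hits = self._hits[visitor_id]
        hits.append(now)
        # Discard pageviews that have fallen out of the sliding window.
        while hits and now - hits[0] > self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_pageviews


def unexpected_region_dominates(
    regions: Iterable[str],
    expected_regions: Set[str],
    min_share: float = 0.5,
) -> bool:
    """Return True if one unexpected region makes up at least min_share of requests."""
    counts = Counter(regions)
    total = sum(counts.values())
    if total == 0:
        return False
    top_region, top_count = counts.most_common(1)[0]
    return top_region not in expected_regions and top_count / total >= min_share
```

Both checks only flag traffic; deciding whether a flagged visitor is challenged, rate-limited, or blocked is a separate step. A long-running version would also need to evict idle visitors so the tracker's memory doesn't grow without bound.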

How to Stop Bad Bots

The following techniques are fairly helpful for stopping bad bots in their tracks:

Allow and Block Lists

Setting up allow lists and block lists can be challenging if you need to keep your resources available to most visitors around the world, but it can still be a fruitful undertaking if handled correctly.

Allow lists use user agent headers and IP addresses alike to identify the bots that are allowed to access a given resource on the web. Any identifier that is not on the list is blocked automatically.

Block lists take the opposite approach to protecting resources from bad bots. These lists specify individual identifiers that cannot access a given resource, allowing for more granular access control.
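
Here is a minimal sketch of both modes in Python using the standard `ipaddress` module. The bot names `ExampleGoodBot` and `ExampleBadBot` are hypothetical, and the networks are reserved documentation ranges from RFC 5737 rather than real crawler or attacker addresses. The allow list pairs each bot name with the networks it is expected to come from, so a request that merely copies an allowed user agent from elsewhere is still rejected.

```python
import ipaddress
from typing import Dict, List, Union

IPNetwork = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical allow list: bot name -> networks that bot is expected to use.
# 192.0.2.0/24 is a reserved documentation range (RFC 5737), not a real crawler.
ALLOW_LIST: Dict[str, List[IPNetwork]] = {
    "ExampleGoodBot": [ipaddress.ip_network("192.0.2.0/24")],
}

# Hypothetical block list entries (198.51.100.0/24 is also a documentation range).
BLOCKED_NETWORKS: List[IPNetwork] = [ipaddress.ip_network("198.51.100.0/24")]
BLOCKED_AGENT_SUBSTRINGS: List[str] = ["ExampleBadBot"]


def allow_list_permits(ip: str, user_agent: str) -> bool:
    """Allow-list mode: permit only listed bots coming from their listed networks."""
    addr = ipaddress.ip_address(ip)
    for bot_name, networks in ALLOW_LIST.items():
        if bot_name in user_agent and any(addr in net for net in networks):
            return True
    # Everything not on the list is blocked by default.
    return False


def block_list_denies(ip: str, user_agent: str) -> bool:
    """Block-list mode: deny only requests that match a listed identifier."""
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in BLOCKED_NETWORKS):
        return True
    return any(s in user_agent for s in BLOCKED_AGENT_SUBSTRINGS)
```

Requiring both the name and the network to match in the allow list is a design choice for this sketch: the user agent header alone is easy to spoof.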

Traps and Challenges

For bots that cannot be identified easily, such as those that cycle through proxies or mask their origins by spoofing user agent header details, it's best to catch them in the act. You can do this by setting a trap: a challenge that only a human should be able to complete.

CAPTCHAs are a nearly ubiquitous standard across the web for good reason: they confuse the overwhelming majority of bad bots. Many other kinds of tests can serve the same purpose, such as simple math problems written out in words that bots can't easily solve.
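
A word-based math challenge might look something like this minimal Python sketch. The function names and the number range are assumptions, and the expected answer is meant to be stored server-side (for example in the user's session) rather than sent back in the form. A fixed template like this is easy for a targeted bot to parse, so at best it filters out generic, untargeted bots.

```python
import secrets
from typing import Tuple

NUMBER_WORDS = ["zero", "one", "two", "three", "four", "five",
                "six", "seven", "eight", "nine", "ten"]


def make_math_challenge() -> Tuple[str, int]:
    """Return (question, expected_answer); keep the answer server-side."""
    a = secrets.randbelow(10) + 1  # random integer from 1 to 10
    b = secrets.randbelow(10) + 1
    question = f"What is {NUMBER_WORDS[a]} plus {NUMBER_WORDS[b]}? Answer with digits."
    return question, a + b


def check_math_answer(submitted: str, expected: int) -> bool:
    """Accept the answer only if it parses as the expected integer."""
    try:
        return int(submitted.strip()) == expected
    except ValueError:
        return False
```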

Another common tactic is 'honey trapping': adding phony form inputs that human visitors never see or fill out, so that any bot that doesn't know better and fills them in gives itself away. Once identified, bad bots can then be diverted to protect your infrastructure.
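
On the server, a honey-trap check takes only a few lines. In this Python sketch, the field name `website` and the functions `is_honeypot_triggered` and `handle_submission` are hypothetical. The field is assumed to be hidden from human visitors with CSS in the page's HTML, for example `<input name="website" style="display:none" tabindex="-1" autocomplete="off">`.

```python
from typing import Mapping

# Hypothetical name of a form field hidden from humans with CSS; human
# visitors shouldn't see it, so it should normally come back empty.
HONEYPOT_FIELD = "website"


def is_honeypot_triggered(form: Mapping[str, str]) -> bool:
    """Return True if the hidden honeypot field was filled in."""
    return bool(form.get(HONEYPOT_FIELD, "").strip())


def handle_submission(form: Mapping[str, str]) -> str:
    """Divert suspected bots instead of processing their submission."""
    if is_honeypot_triggered(form):
        # Quietly discard; answering as if nothing happened may keep the
        # bot from learning that it was detected.
        return "discarded"
    return "accepted"
```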

Bad bots make up a big part of the world's Internet traffic, but they can be handled fairly efficiently through the use of some of the techniques outlined above.

By mitigating the actions of bad bots, you can ensure better performance of your own web resources for real users and reduce the risk of data breaches or other mishaps in the process.