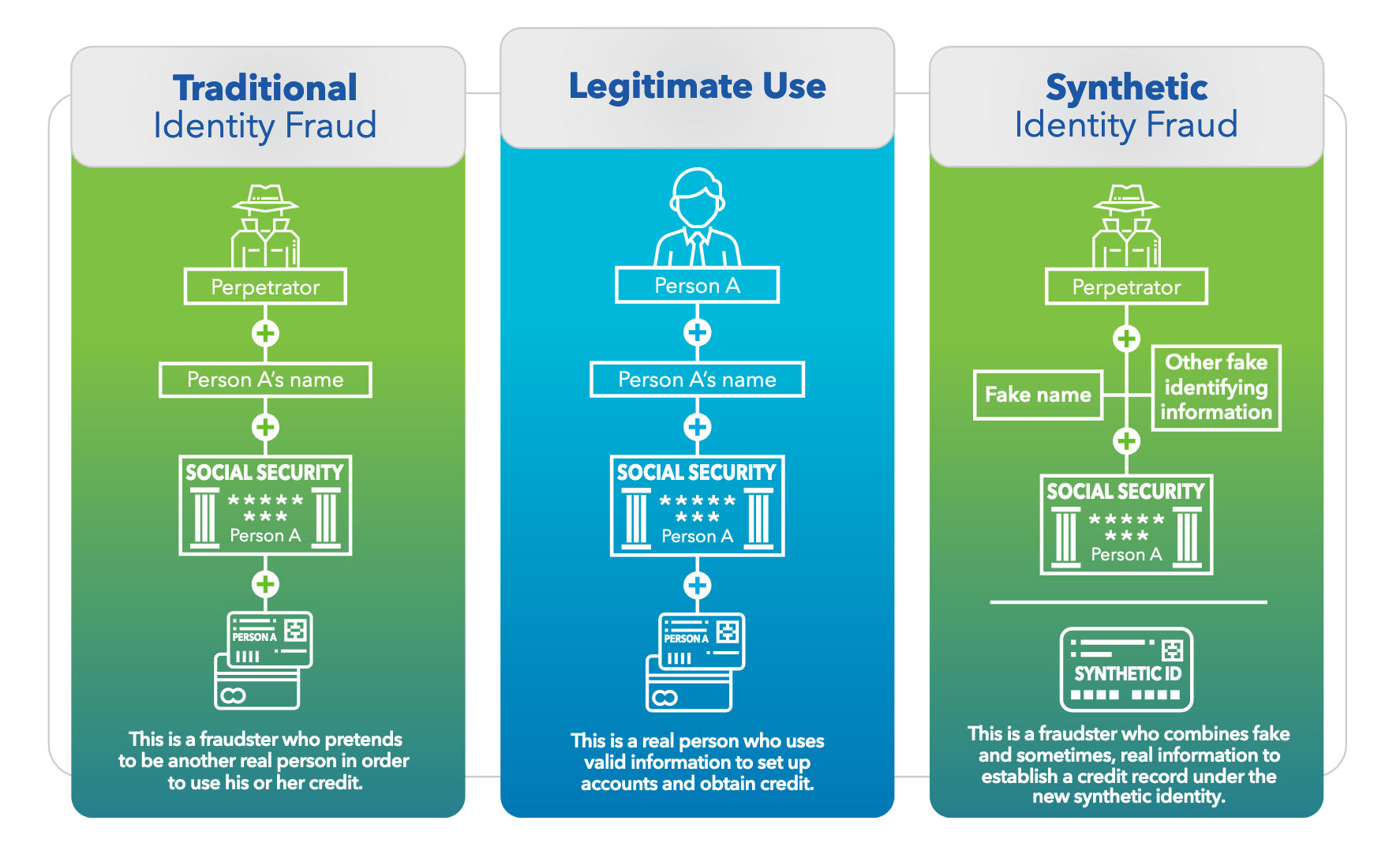

The Federal Reserve offered new guidance this week on how organizations can mitigate synthetic identity fraud, a scheme in which attackers combine information, some real and some fake, to create new identities that can be used to deceive financial institutions and government agencies.

Although synthetic identity fraud is not widely reported, the Federal Reserve cited a recent analysis by Auriemma Group, a payments and lending advisory firm, which found that synthetic identity fraud has cost U.S. lenders $6 billion and accounts for 20% of credit losses.

To carry out synthetic identity fraud, attackers typically rely on personally identifiable information (PII) belonging to people who may not check their credit often, such as the Social Security numbers of children or the elderly. Attackers can piece together bits of information, build trust with lenders over time, and then take out a line of credit before disappearing off the grid.

In guidance released this week, "Mitigating Synthetic Identity Fraud in the U.S. Payment System," the Federal Reserve stressed that organizations should work with other members of their industry to mitigate the threat collectively.

The document builds on two white papers previously released by the Federal Reserve.

Although synthetic identity fraud can affect multiple industries, the Federal Reserve, as expected, focused mainly on the U.S. financial services industry.

Specifically, the Fed encourages organizations to use both manual and technological data analysis. Scrutinizing PII such as names, Social Security numbers (SSNs), and dates of birth can help, but it is also beneficial to analyze relationships across banking products, such as checking and lending accounts, to identify users who share the same SSN or accounts opened from the same IP address.
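To make that kind of relationship analysis concrete, here is a minimal Python sketch. It is not taken from the Federal Reserve's guidance: the `AccountRecord` layout and the `find_shared_attributes` helper are hypothetical, and the sample data is made up. The function groups accounts from every product line by a single attribute (an SSN or the IP address an account was opened from) and flags any value linked to more than one customer name, one simple signal an analyst might then review by hand.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class AccountRecord:
    """Hypothetical account record; real institutions' schemas will differ."""
    account_id: str
    product: str        # e.g. "checking", "credit_card", "loan"
    customer_name: str
    ssn: str
    opening_ip: str     # IP address the account was opened from


def find_shared_attributes(records: Iterable[AccountRecord], attribute: str) -> Dict[str, List[str]]:
    """Group accounts across all product lines by one attribute (e.g. "ssn" or
    "opening_ip") and return each value linked to more than one customer name,
    mapped to the sorted IDs of the accounts that share it."""
    groups: Dict[str, List[AccountRecord]] = defaultdict(list)
    for record in records:
        groups[getattr(record, attribute)].append(record)

    flagged: Dict[str, List[str]] = {}
    for value, group in groups.items():
        distinct_names = {r.customer_name for r in group}
        if len(distinct_names) > 1:
            flagged[value] = sorted(r.account_id for r in group)
    return flagged


# Made-up sample data: fictitious SSNs and documentation-range IP addresses.
SAMPLE_RECORDS = [
    AccountRecord("A1", "checking", "Jane Roe", "123-45-6789", "203.0.113.7"),
    AccountRecord("A2", "loan", "John Poe", "123-45-6789", "198.51.100.4"),
    AccountRecord("A3", "credit_card", "Ann Lee", "987-65-4321", "203.0.113.7"),
    AccountRecord("A4", "checking", "Ann Lee", "987-65-4321", "192.0.2.55"),
]

if __name__ == "__main__":
    print(find_shared_attributes(SAMPLE_RECORDS, "ssn"))         # {'123-45-6789': ['A1', 'A2']}
    print(find_shared_attributes(SAMPLE_RECORDS, "opening_ip"))  # {'203.0.113.7': ['A1', 'A3']}
```

A flagged value is only a lead for manual review, not proof of fraud. Households, shared devices, and networks behind a single public address can legitimately share an IP address.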

Artificial intelligence and machine learning, two of the industry's favorite buzzwords, have also helped detect synthetic identity fraud.

Collaboration is key, though. The Federal Reserve, which consulted industry experts for its guidance, suggested that financial institutions need to break down "internal barriers" to allow information sharing across product lines. A fraudster who opens a fake credit card account may also try to set up a fraudulent direct deposit, line of credit, or loan.

If one thing is clear from the guidance, it's that there's no silver bullet: each mitigation measure has drawbacks. The paper points out, for example, that information sharing is only as effective as the quality and integrity of the information itself. AI and machine learning can help, but they are not without false positives. The report also cites an ID Analytics study which found that traditional fraud models failed to catch 85% to 95% of likely synthetic identities. By contrast, a tool one credit union used to flag fraud identified 85% of credit applications that originated from synthetic identities.

The document does offer an idea to help institutions validate identities without collecting or sharing customer data, though it would involve legal and compliance hurdles. The idea involves the formation of a "central repository that participants could query to obtain confirmation that a customer meets an age requirement, without collecting, storing and sharing that customer's actual PII."

"Conceptually, this simple example illustrates a potential opportunity to remove barriers while limiting data security and liability vulnerabilities," the paper reads.
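The repository concept can be sketched as a service that answers only a yes/no question. The Python below is a minimal illustration built on assumptions that do not appear in the paper. Participants are assumed to derive an opaque token from a customer identifier using a shared HMAC key, so only the token reaches the repository; `tokenize` is a hypothetical helper. A trusted source is assumed to have already enrolled verified birth dates. `AgeAttestationRepository`, also hypothetical, returns `True`, `False`, or `None` for an unknown token, and never returns the birth date itself.

```python
import hashlib
import hmac
from datetime import date
from typing import Dict, Optional


def tokenize(shared_key: bytes, identifier: str) -> str:
    """Derive an opaque lookup token from an identifier with HMAC-SHA256.
    Assumption: participants share this key, so the raw identifier is never sent."""
    return hmac.new(shared_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


class AgeAttestationRepository:
    """Hypothetical central repository that answers only yes/no age questions."""

    def __init__(self) -> None:
        # token -> verified date of birth; never returned to callers
        self._birth_dates: Dict[str, date] = {}

    def enroll(self, token: str, date_of_birth: date) -> None:
        # Assumed to be called only by a trusted source that verified the birth date.
        self._birth_dates[token] = date_of_birth

    def meets_age_requirement(self, token: str, minimum_age: int, as_of: date) -> Optional[bool]:
        """Return True/False for an enrolled token, or None if the token is unknown."""
        dob = self._birth_dates.get(token)
        if dob is None:
            return None
        # Subtract one year if the birthday has not yet occurred in the as_of year.
        had_birthday = (as_of.month, as_of.day) >= (dob.month, dob.day)
        age = as_of.year - dob.year - (0 if had_birthday else 1)
        return age >= minimum_age


if __name__ == "__main__":
    SHARED_KEY = b"example-shared-key"  # placeholder; real key management is out of scope
    repo = AgeAttestationRepository()
    token = tokenize(SHARED_KEY, "123-45-6789")  # fictitious SSN
    repo.enroll(token, date(2000, 6, 15))
    print(repo.meets_age_requirement(token, 18, date(2018, 6, 15)))  # True
    print(repo.meets_age_requirement(token, 18, date(2018, 6, 14)))  # False
```

Returning `None` for an unknown token keeps "no record" distinct from "too young." Also note that a keyed hash of a low-entropy identifier such as an SSN protects the data only while the key stays secret. Anyone holding the key could enumerate candidate numbers and match tokens.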