The boom in investment in threat intelligence companies is not surprising given the aggressive buzz in the market today. In an effort to safeguard your organization from advanced persistent threats and malware, the current market tells businesses to invest in threat intelligence and spend even more money each year so as to protect their networks against these agile threats that sneak around inside the network and conduct covert exfiltration of your most sensitive information. Sounds great, and they aren't necessarily wrong, but in my experience, building out threat intelligence capabilities requires organizational maturity and many other pieces before it can be valuable to an organization.

So before you decide to invest in threat intelligence or any threat feeds, you need to focus on your network first, because your internal data is a more valuable threat intelligence feed than anything a third party organization can give you. This article will get you primed for researching and understanding the data within your own network, which is vital in the field of threat research.

Basic Domain Name Categorization

So let's assume you're the person tasked with sitting in your chair and staring at domains/IP's all day. Day after day you're chasing down bad domains that you might be alerted on from security devices and monitoring tools. In many cases, simple categorization of DNS data can lead to a reduced workload and it can sometimes improve certain detection capabilities when looking for suspicious network behavior.

[Forensic Research Tip: Avoid Cognitive Bias]

“Don’t go looking for the badness, just tell us the story.”

At first though, our goal and results will not be with the intent of discovering malicious activity, but merely understanding how to tell the story of what our network is doing on a day-to-day basis. Over time, with some regular fine-tuning, your DNS network will be manageable to the point that it will be trivial to comfortably tell upper management what that story is. Maintain a forensic analyst mindset when conducting this research, and a beginner’s mind so that you minimize assumptions and create an exploratory environment. Once you know what the story should be, deviations from that story will become very apparent.

Popular Domains

Popular domains like Google, Yahoo, Facebook and the like we can categorize as stable, reputable, and commonly accessed domains – The Alexa top 1 million is a good starting point (downloadable here). Popular and well-known domains are great for using as a baseline for categorizing domains that are known to be generally trustworthy and may represent the bulk of your DNS traffic. Let's be very clear that reputable doesn't mean that abuse never happens, but we are classifying this by the fact that the domain itself is owned and used in a reputable manner by the public.

Here are a few starting points for analysis of popular domains. By asking questions about common or trending characteristics that are identified, they can help assess if the domain is something you decide to trust.

1. Are certain Domain Registrars more trustworthy than others?

Certain companies like MarkMonitor and CSC Corporate Domains Inc. provide domain registration services to legitimate companies, so is there a lower likelihood of abuse coming from those domains? What’s their vetting process? Is there data sets out there that can help rank their reputation? (Hint: Start sampling Domain name WHOIS data and matching these common registers and see which ones show up most commonly that verify the registrar.)

2. How old is the domain and does it align with verifiable information in regards to the company the claims ownership of the domain?

A common feature of abused domains involves a very recent registration date. Legitimate companies showing up in Alexa's 500k or even 1 million listed ranks will not be less than 1 year old.

3. How many of the Alexa 1 million popular domains populate domain requests from within my organization’s network?

If this is viable, maybe use this as a baseline whitelist.

4. How many popular domains map back to IP’s owned by the company?

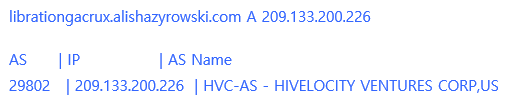

Mapping IP address history to whitelisted domains and logging and understanding changes and how common changes occur will allow you to get an understanding of dynamic yet reputable behavior. In it’s advanced form, this is called Passive DNS and can be extremely useful for identifying historical behavior of domains and their activity. Tools that allow you to map IP to ASN information become very handy in doing this in near real-time, such as Team Cymru’s IP2ASN lookup tool.

Figure 1: Example of IP ASN Mapping

It's important to note that WHOIS data is not verified except for the e-mail, so any data can be entered for the physical address, name, contact info, etc.

Content-Delivery Network Domains (CDNs)

Sometimes CDNs can put a wrench in your research early, so it’s best to address these types of domains now. There are many CDN networks out there, such as Akamai, Amazon Cloudfront, Cloudflare, Edgestream and a dozen others. And we need to account for them. Not all IP’s of popular domains will map directly to IP space owned by the registered company. Many IP addresses will be mapped to CDN providers and upstream providers and may be labeled as such. Rating reputation of these CDN’s may be something you may want to look into by analyzing sample sets of types of domains that use each provider. Many personal domain or non-popular entities may use providers such as Cloudflare more commonly than Akamai due to free service offerings Cloudflare makes available to smaller organizations that Akamai may not. This should factor in your statistics upon sampling.

Top-Level-Domains (TLD's)

Top-Level-Domain classification can be extremely useful within your organization and gives you visibility of the common domain requests within each TLD name. Simply put, start categorizing the most commonly used TLD's such as:

- .com (rated as 1, being most common)

- .net (rated as 2)

- .org (rated as 3)

- .edu (rated as 4)

- .gov (rated as 5)

- .mil (rated as 6, being least common)

Classification properties will be Standard or Non-Standard. If Standard, add its rating so that you can still use a metric when combined with other characteristics.

Experimental question: How many domains do you intentionally visit in your daily browsing routine that end in .biz or .info? What about .su, the old Soviet Union TLD that has been replaced by .ru but still found in use? Let's at least categorize them and understand if there is anything particularly interesting about sites ending in Non-Standard TLD’s. Not all of them are bad, but you might find some bad ones that you wouldn't have seen before, or a trend of newly registered bad sites in a given temporal period. Mapping and accounting for non-standard TLD’s can assist you in identifying deviations in network behavior.

Combining other features inside each Non-Standard TLD analysis set can sometimes enable further potential insight. A hypothetical example being:

{

1. All .TK sites requested in past month were called by less than 10 internal assets within my network.

2. Create dates of .TK domains are less than one year old.

3. IP addresses are not on whitelist from popular domain or trusted IP information.

}

Pivoting from here, you can then find other particulars such as any specific qualities surrounding the assets requesting these domains. For example, do they belong to a certain department within your organization such as the training department or HR?

Additionally, sorting TLD domains (both Standard and Non-Standard) to their current regional locations is usually suggested in general, which most SIEMs and network monitoring tools tend to do by default.

Dynamic DNS Domains

Dynamic DNS domains are designed to support dynamic changes in IP addresses, so you can host something from home on the Internet and not have to worry about your ISP changing your IP address and leaving you unreachable through your domain. These domains are available via a dynamic DNS service provider and while they are popular for legitimate reasons, they are also very commonly abused. A domain of this sort might look like:

Lancesite.dyndns.org or coolsite.dyn.com - note the subdomain “coolsite” followed by the dynamic DNS provider “dyn.com.” When we start identifying these on our network we are looking for the subdomain.

There are a massive amount of dynamic DNS domains out there and it's a good idea to be aware of how they are used on your network and by whom. For instance, your organization may have a restrictive policy in regards to connecting to unknown networks or using home network connections to purposely bypass the company proxy setup to manage and authorize outbound traffic. Many times dynamic DNS is used to do this and it’s definitely good to identify this type of traffic activity.

Start by identifying the name servers that are associated with dynamic DNS domains so that you can classify queries to those name servers from your network as requesting dynamic DNS domains.

Common dynamic DNS provides' name servers include:

- ns*.afraid.org

- ns*.dyndns.org

- ns*.no-ip.com

- ns*.changeip.org

- ns*.dnsdynamic.org

Below is a list of resources that attempt to provide a feed of all known dynamic DNS provider domains. By making a referenced list in your SIEM or network monitoring tool, simple detection can be performed by identifying any domain pattern matching [*].[dynamic_dns_subdomain_from_list].[TLD]. Fine tuning might be required.

- Emerging Threats: https://github.com/EmergingThreats/et-luajit-scripts/blob/master/dyndomains-only.txt

- Malware Domains: http://mirror1.malwaredomains.com/files/dynamic_dns.txt

Most security professionals choose to block and alert on all dynamic DNS activity on their network due to the sheer volume of abuse, however you should use the above lists, investigate the activity on your network, and draw your own conclusions.

Untrusted/Unknown Domains

Untrusted/unknown domains coming out of your network should definitely be inspected. There are many techniques for identifying and monitoring for new and unknown domains. Extracting your own DNS Cache from your DNS server to identify historically cached domains can be a great start to conduct passive research within your organization.

If you use BIND you can run the below command as sudo to dump the cache file to disk:

rndc dumpdb -cache

This should drop 'cache_dump.db' in the default directory that was configured in /etc/named.conf which is usually /var/log/*.

Then we can run these against a whitelist of common domains (such as Alexa’s list) and then parse out dynamic DNS domains, non-standard TLD’s, etc. After that we have the leftovers, which we can inspect and whitelist if they are linked to what we would consider legitimate and reputable features. Unknown domains are great because they open up possibilities for new and untapped research on the behaviors of newly registered domains.

Depending on the size of your organization's network you may have a lot of data in the mix, but over time as your “already seen” list grows, your signal-to-noise ratio for identifying and classifying new domains and their behavior becomes quite manageable.

Young Domains

When observing malicious domains of the past used in malware and phishing attacks there is frequent amount of “young” domains registered prior to the malicious campaign being executed. A trivial technique includes capturing the "create date" that the domain was originally registered. This information is found in WHOIS records and can easily be parsed for categorization and identification of new or young domains (keep in mind that doing this in bulk may require a WHOIS API service that requires purchase and is usually licensed based on requests). Multiple categorical levels can be designed such as:

- Less than 30 days (class 1)

- Less than 60 days (class 2)

- Less than 90 days (class 3)

- Less than 1 year (class 4)

- 1 year plus but less than 2 (this is optional and is used with other feature sets during research to identify edge cases or outliers)

I personally find it hard to imagine the marketing capabilities of any one reputable company that can successfully get their recently registered domain being requested by an asset within your organization’s network. These young domains we assume will be more of a rarity on your network, but will give likely more precise findings on their own that malicious behavior may be present. However, surprising corner cases might show up that are valid, such as CDN’s and ad networks adding a new domain space or cloud providers extending the domain name space.

Hypothetical Ariel View So Far

At this point – before we go too far ahead – you should be able to make a graph or dashboard either from your SIEM tool or simply by plotting the extracted data. I’m going to display a completely hypothetical example from thin-air to give you an idea of what a starting point may look like:

Figure 2: DNS Classification First Phase Graph Representation

This graph is a simple example, and many SIEM tools will allow you pivot to different views such as how many assets are requesting what domain types so that you can identify patterns or trends that occur on your network.

Verifiable Analysis in Action

To prove a quick point on the young domain characteristics above let’s analyze a quick sample of the most newly reported malicious domains at the publicly available site malwaredomainlist.com as of today’s date:

Figure 3: Malwaredomainslist.com Snapshot for Young Domain Analysis

The above screenshot is a sample of the front page of the most recently reported malicious domains at the time of writing this. We are going to reverse the analysis process from maliciously reported so you can see some of the unique features that do exist in domains that aren’t commonly friendly. We will pretend we found many of these within your network, identified as new or unclassified domain activity from the past week.

As you can see, some domains in this list are what I called “mixed” domains, meaning they are valid domains (such as the first one at the top of the list being identified as an educational (.edu) site in China) but likely hacked or misused temporarily. Examples like this one are usually used for directing user traffic to a short-lived exploit that will then push malware down onto vulnerable unsuspecting computers.

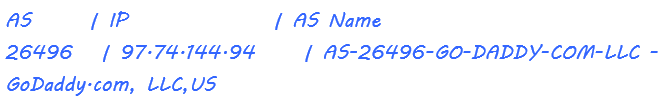

The second site on the list also can be identified as “mixed,” but looks like it was possibly taken over by someone taking control of their registrar or DNS settings. For instance, the original subdomain is registered by a user and appears to be registered over a year ago via GoDaddy. The subdomain’s matching IP is also hosted at GoDaddy:

Figure 4: Subdomain Assessment (truncated for brevity)

Brief website analysis shows a personal page was developed and the site page owner identifies herself as attending Ferris State University, which matches the general location of the WHOIS information provided for the contact info. Essentially nothing complicated, the website itself was last updated in 2014 as well as created then (greater than one year in age) and it identifies itself as a WordPress site. The IP address for this domain is also hosted on GoDaddy servers and is a typical setup for a personal website such as this:

Quick DNS lookup on the domain:

Quick IP2ASN mapping of IP matches GoDaddy:

Quick lookup info on the leaf domain:

Let’s take a closer look via passive DNS to see all historical activity to this domain and leaf to determine age of the leaf domain being added:

Figure 5: RiskIQ pDNS View of our Suspected Leaf Domain

Just as suspected the use of the leaf domain is fairly new compared to the create date of the original domain itself (2014 vs. creation and use of the leaf domain last month).

Now let's check all activity to this domain period:

Figure 6: pDNS History of Mixed Domain (RiskIQ View Panel)

Following the historical timeline and sorted by first seen, we see congruency with the historically accessed subdomain on 3-23-2014 which matches the create date of the WHOIS and the A record pointing to a GoDaddy hosted server and not changing since last seen on June 18, 2015. NS servers are same day aligned to ns[61-62].domaincontrol.com. As we get into 2015-06-08 we see our suspected 209.133.200.226 IP address being the destination for five brand new leaf domains – apparently being added same day and leaving the subdomain alone. This suggests the actors had access to control the DNS entries for the website. Let’s pivot onto the suspected hosting IP address and see what shows up there:

Figure 7: Sorted by First Seen we see a campaign of 180 leaf domains on multiple verifiable personal subdomains

For blog brevity I am truncating the list but there are literally 180 uniquely added leaf domains starting on 6-05-2015 through 6-30-2015 on sub-domains registered and managed at GoDaddy that, when checked for validity, can be classified as personal class/small organization owned domains that check out. Patterns that seem obvious include that they are hosted using WordPress, registered by GoDaddy, use NS servers at Domaincontrol.com and all started at the beginning of June 2015. We can safely classify these together as a clustered set of activity performed by one threat actor group. A Technique, Tactic and/or Procedure (TTP) I have noted is that this threat actor group is essentially creating their own poor man’s Dynamic DNS by taking over certain not-so-popular domains hosted and managed by GoDaddy and running WordPress.

The irony is that was just the second domain on the list and I was mainly trying to point out how commonly young domains are used by malicious domains involved in malware and we somehow went down a rabbit hole that was quite interesting. If we quickly continue down the list without following rabbit holes this time we can see some obvious young domains in action:

Figure 8: Going down the list (quickly this time)

Simply put I’m going to categorize these by unique subdomains and the domain origin create date I derived from the WHOIS data, its TLD type (Standard/Non-Standard), Alexa whitelist status (yes/no), Dynamic DNS status (yes/no) and Young Domain status just for basics with a final area for you to rate a classification:

Figure 9: Domain Classification Table

So this was quite easy in this case but you can build decision trees based on the weights of how you decide to classify the domain based on the collected and identified characteristics. Keep in mind, these are just the basic features based on registration data and whitelist data. The more articulately and precisely we break down the feature sets, we end up with an advanced understanding of the domains being requested within our networks and what they might be trying to do.

With this basic information it should be quite simple to build use cases within your SIEM tools to generate daily dashboards that classify these characteristics and can calculate a quick score for each domain. From there, fine tuning and more research can be conducted to identify the more advanced methods of classification.